
Highlights

- Physical AI marks the creation of next-generation robotics with action-based artificial intelligence in autonomous mobile robots, automated guided vehicles, drones, mobile manipulator arms, quadrupeds, and humanoids.

- By continuously cycling data between digital and physical realms, the physical AI framework ensures ongoing learning and improvement, ultimately bridging the gap between simulation and real-world execution.

- As machines evolve to perceive, learn, and interact with their surroundings in real time, the boundaries between digital and physical realms will blur, ushering in a future where automation is not just smart but seamlessly integrated into daily life.


Unveiling physical AI: The next frontier in robotics and AGI

Artificial intelligence (AI) is moving beyond digital applications into the physical world through physical AI.

Here, intelligent robots perceive, reason, and interact with their environments, potentially paving the way for the holy grail of AI – artificial general intelligence (AGI).

Earlier this year, at CES 2025, NVIDIA emphasized this shift, stating, "Physical AI will revolutionize the $50 trillion manufacturing and logistics industries. Everything that moves—from cars and trucks to factories and warehouses—will be robotic and embodied by AI."

A key driver of this transformation is the advent of software-defined robots (SDRs): machines governed by adaptable AI models rather than static, procedural programming. These robots combine advanced machine learning, large language models (LLMs), and vision-language models (VLMs), which give them thinking and reasoning capabilities, with computer vision and 3D lidar sensing. Together, these let them navigate complex environments, maintain situational awareness, make real-time decisions, and learn and adapt from their interactions.

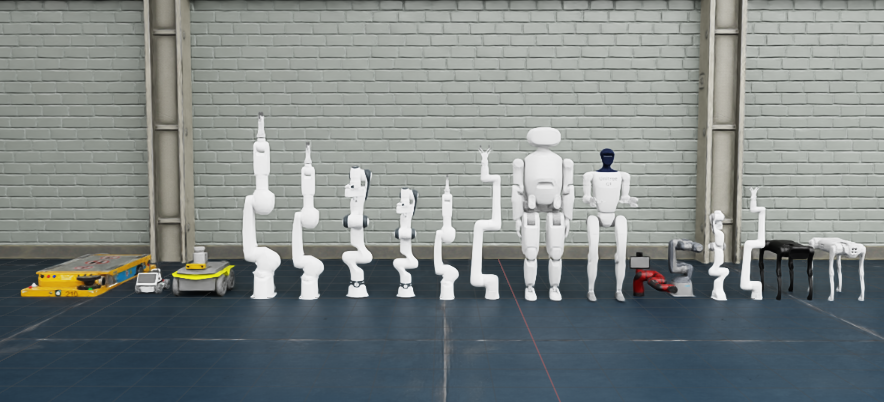

Unlike traditional automation, physical AI brings action-based artificial intelligence to a new generation of robots: autonomous mobile robots, automated guided vehicles, drones, mobile manipulator arms, quadrupeds, and humanoids.

What drives physical AI

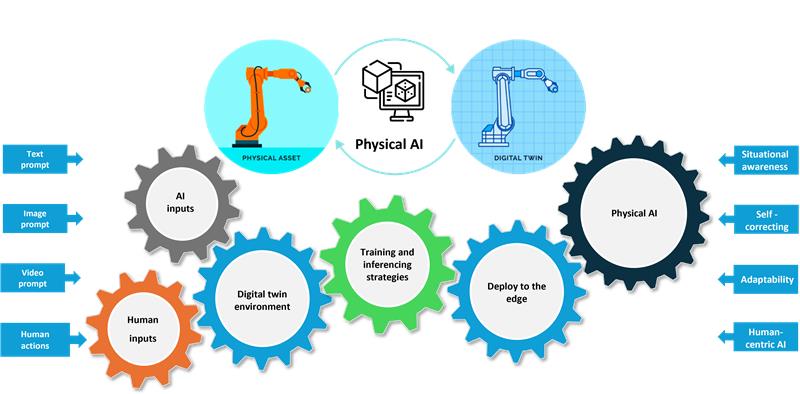

Physical AI merges real-world robotics with digital twins in a continuous feedback loop.

It combines inputs from text, images, and videos with human input, captured through teleoperation and demonstrations of human actions, to perform training and inference in a virtual environment, enabling safe, cost-effective experimentation before deployment.

The trained models, policies, and weights are then pushed to edge devices like physical robots, where they operate with minimal latency. Key capabilities such as situational awareness, self-correction, and adaptability are all guided by a human-centric design that emphasizes collaboration between people and machines. Continuously cycling data between digital and physical realms ensures ongoing learning and improvement, ultimately bridging the gap between simulation and real-world execution.
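The feedback loop can be illustrated with a deliberately tiny sketch, assuming a one-dimensional robot whose displacement equals a gain times its command. Everything here is hypothetical: `Simulator` stands in for the digital twin, `RealRobot` for the physical machine, and "training" is reduced to choosing the command that reaches a target inside the twin. Field logs from the real robot are then used to recalibrate the twin (a simple form of system identification), so the gap between simulation and execution shrinks with each cycle.

```python
import random
from dataclasses import dataclass


@dataclass
class Simulator:
    gain: float  # the digital twin's belief about displacement per unit of command


@dataclass
class RealRobot:
    true_gain: float  # hypothetical physical gain, unknown to the training pipeline
    noise: float = 0.01

    def execute(self, command, rng):
        # Real-world motion: true dynamics plus a little sensor/actuation noise.
        return self.true_gain * command + rng.gauss(0.0, self.noise)


def train_policy(sim, target):
    # "Training" in simulation: pick the command that reaches the target in the twin.
    return target / sim.gain


def calibrate(logs):
    # Least-squares fit of displacement = gain * command (no intercept) from field logs.
    return sum(c * d for c, d in logs) / sum(c * c for c, _ in logs)


def feedback_loop(cycles=3, target=1.0, seed=0):
    rng = random.Random(seed)
    sim, robot = Simulator(gain=1.5), RealRobot(true_gain=1.0)
    errors = []
    for _ in range(cycles):
        command = train_policy(sim, target)                              # 1. train in the twin
        logs = [(command, robot.execute(command, rng)) for _ in range(20)]  # 2. deploy, collect data
        mean_disp = sum(d for _, d in logs) / len(logs)
        errors.append(abs(target - mean_disp))                           # 3. measure the sim-to-real gap
        sim.gain = calibrate(logs)                                       # 4. feed real data back
    return errors, sim.gain
```

With these assumed values, the first deployment undershoots by roughly a third because the twin overestimates the gain; after one calibration pass, the commanded motion lands close to the target.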

Embodied intelligence

Embodied intelligence is the heart and soul of physical AI.

The framework leans into embodied intelligence, an evolutionary shift in AI. It starts with LLMs, which handle text; the next stage, VLMs, adds visual understanding. The journey culminates in vision-language-action (VLA) models, in which AI interprets multimodal data and executes actions in real time.
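The progression from LLM to VLM to VLA is easiest to see in the input and output types. The sketch below is a hypothetical illustration, not any real model's API: `Image`, `Action`, and the three `Protocol` classes are invented names, and `ToyVLA` is a rule-based stand-in with no learning at all. It only demonstrates the contract: a VLA takes the same inputs as a VLM but returns an action instead of text.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Image:
    pixels: list[list[int]]  # placeholder for a grayscale camera frame (0-255)


@dataclass
class Action:
    velocity: tuple[float, float]  # hypothetical (forward, lateral) command in m/s


class LLM(Protocol):
    def __call__(self, text: str) -> str: ...  # text in, text out


class VLM(Protocol):
    def __call__(self, image: Image, text: str) -> str: ...  # image + text in, text out


class VLA(Protocol):
    def __call__(self, image: Image, text: str) -> Action: ...  # image + text in, action out


class ToyVLA:
    """Hypothetical rule-based stand-in that satisfies the VLA contract (no learning)."""

    def __call__(self, image: Image, text: str) -> Action:
        n = sum(len(row) for row in image.pixels)
        brightness = sum(map(sum, image.pixels)) / n if n else 0.0
        instruction = text.lower()
        # Halt on request, or if the scene is too dark to perceive safely.
        if "stop" in instruction or brightness < 10:
            return Action((0.0, 0.0))
        if "left" in instruction:
            return Action((0.0, 0.2))   # lateral left (sign convention assumed)
        if "right" in instruction:
            return Action((0.0, -0.2))  # lateral right
        return Action((0.2, 0.0))       # default: move forward
```

In a real VLA, the body of `__call__` would be a learned network, but the signature, perception plus language in and motor command out, is what distinguishes it from the earlier stages.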

Newer learning approaches

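The DeepSeek effect, the shift toward models such as DeepSeek-R1 that develop reasoning largely through reinforcement learning, is transforming the way AI systems acquire knowledge.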

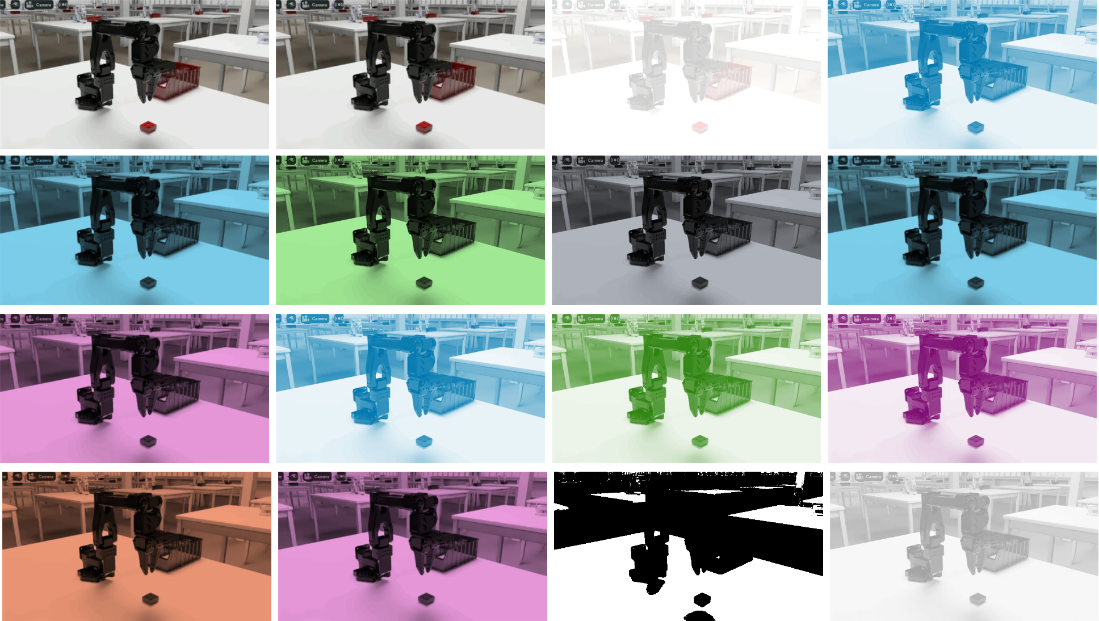

Embodied intelligence relies on extensive simulation-based learning, spanning supervised and reward-driven methods, so that robots can adapt safely to complex real-world environments. The most prominent and preferred approaches are reinforcement learning and imitation learning, which take a reasoning-first, knowledge-next approach rather than a knowledge-first, reasoning-next approach.

Reinforcement learning: Learn, fail, and succeed

In reinforcement learning, robots learn optimal behaviors and strategies through trial and error. In a 3D simulation environment such as NVIDIA Isaac Gym, hundreds of simulated robots attempt a task (like walking down stairs) in parallel, and each action earns the robot a reward or a penalty.

Rewards reinforce the actions that earned them, while penalties discourage an action and prompt the robot to try an alternative. New scenarios are introduced throughout this continuous learning process, helping robots adapt to a wide range of environments. After training, the learned behaviors (updated policies) are deployed onto real robots.
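The reward-and-penalty cycle can be sketched with tabular Q-learning on a toy staircase. This is an illustrative stand-in: simulators such as Isaac Gym are typically paired with deep RL over continuous joint states, whereas here the "staircase" is a hypothetical line of six states, the robot can step down (+1) or back up (-1), reaching the bottom earns +1, and every other step costs a 0.1 penalty.

```python
import random

N_STEPS = 5         # hypothetical staircase: states 0..5, state 5 is the bottom (goal)
ACTIONS = (-1, +1)  # step back up, step down


def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STEPS)
    if nxt == N_STEPS:
        return nxt, 1.0, True   # reward: reached the bottom
    return nxt, -0.1, False     # penalty: every extra step costs something


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STEPS + 1) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit what has worked, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Q-learning update toward reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q


def greedy_policy(q):
    """The learned policy that would be deployed: best action in each non-terminal state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STEPS)}
```

After training, `greedy_policy(train())` chooses to step down from every state: the penalties have taught the robot that backtracking only delays the reward.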

Imitation learning: Observe, practice, and succeed

With imitation learning, robots learn to perform complex tasks by mimicking expert reference behaviors rather than by trial and error. In its simplest form, behavior cloning, it is a supervised learning problem: the expert's actions are recorded, and the robot learns to map each observation to the action the expert took. A related form, inverse reinforcement learning, instead has the robot infer the underlying reward function that the expert appears to optimize. Varying scenarios can then be presented in 3D simulation environments such as NVIDIA Isaac Gym to practice and further refine the behavior.
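Behavior cloning can be sketched as plain supervised regression. The example below is a minimal illustration under stated assumptions: a hypothetical expert whose action is always 80% of the remaining distance to a target, demonstrations logged with a little noise, and a linear policy fitted by ordinary least squares. `rollout` then "practices" the cloned policy in a trivial simulated reaching task.

```python
import random


def expert_action(error):
    # Hypothetical expert: always closes 80% of the remaining distance to the target.
    return 0.8 * error


def record_demonstrations(n=200, seed=1):
    """Log (observation, action) pairs while the expert works; noise mimics imperfect sensing."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        error = rng.uniform(-1.0, 1.0)  # observation: signed distance to target
        demos.append((error, expert_action(error) + rng.gauss(0.0, 0.01)))
    return demos


def behavior_cloning(demos):
    """Fit a linear policy action = w * error + b by ordinary least squares."""
    n = len(demos)
    mean_x = sum(x for x, _ in demos) / n
    mean_y = sum(y for _, y in demos) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in demos)
    var = sum((x - mean_x) ** 2 for x, _ in demos)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def rollout(w, b, start=0.0, target=1.0, steps=10):
    """Practice the cloned policy in a trivial simulated task; return the final error."""
    position = start
    for _ in range(steps):
        position += w * (target - position) + b
    return target - position
```

The fitted weight comes out close to the expert's 0.8 without the robot ever being told the rule; real systems replace the linear model with a neural network over camera and joint data.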

Imitation learning is likely to be key to capturing tacit knowledge from experienced workers and converting it into actionable insights for enterprises, while helping address skill gaps and an aging workforce.

Breakthrough applications: Bridging the gap between imagination and reality

Physical AI is set to redefine every aspect of motion-driven technology.

From drones revolutionizing logistics and agriculture to AI-powered exoskeletons enhancing human mobility, the fusion of AI with the physical world will create unprecedented levels of autonomy, adaptability, and intelligence. As machines evolve to perceive, learn, and interact with their surroundings in real time, the boundaries between digital and physical realms will blur, ushering in a future where automation is not just smart but seamlessly integrated into daily life. Some of the breakthrough applications already in the testing phase include:

Humanoid co-worker:

Manufacturing assemblies (such as BIW (body in white), trim line, and process) often require workers to perform complex tasks demanding incredible dexterity, and the know-how behind them becomes tacit knowledge with experience. Yet the nature of the work sometimes results in errors, incidents, and difficulty adapting to fast-changing production requirements.

- Human-machine collaboration: Cobots (collaborative robots) work alongside humans in a shared space, greatly enhancing productivity and reducing errors in task execution.

- Industrial automation: Autonomous robots can self-initiate and execute complex tasks (such as inventory sorting, dexterous assembly, and material movement).
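Sharing a workspace safely is what lets cobots collaborate with people. One common approach is speed and separation monitoring, one of the collaborative operating modes described in ISO/TS 15066: the robot slows as a person approaches and stops if they come too close. The sketch below assumes a simple linear ramp; the function name and all distances and speeds are hypothetical illustrations, not values taken from the standard.

```python
def speed_limit(separation_m, stop_dist=0.5, full_speed_dist=2.0, max_speed=1.0):
    """Hypothetical speed-and-separation rule: scale robot speed with distance to the nearest person."""
    if separation_m <= stop_dist:
        return 0.0  # person too close: protective stop
    if separation_m >= full_speed_dist:
        return max_speed  # nobody nearby: full programmed speed
    # Linear ramp between the stop distance and the full-speed distance.
    return max_speed * (separation_m - stop_dist) / (full_speed_dist - stop_dist)
```

In practice, the separation would come from safety-rated sensors such as lidar or light curtains, and the thresholds would be derived from a risk assessment of the specific cell.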

Remote teleoperation:

In medical procedures, surgeons aim for precision in delicate surgeries. In manufacturing warehouses, inventory managers aim for faster picking, packing, and assembly irrespective of payload. In every case, the goal is to enhance precision, flexibility, and safety, especially when interacting with environments that are hazardous, difficult to access, or too complex for direct human operation.

- Remote AI: Operators can interact with machines or robots remotely, with AI-assisted autonomy guiding their actions.

- Haptics: Operators can feel the movements and forces that the machine or robot experiences, improving their sense of presence and control.
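Both ideas can be sketched in a few lines, assuming one widely used shared-control scheme, linear policy blending, where an arbitration weight decides how much the AI's suggested command overrides the operator's. The function names, the use of confidence as the weight, and the force scaling and saturation values are all hypothetical choices for illustration.

```python
def blend_commands(human_cmd, ai_cmd, confidence):
    """Shared autonomy by linear blending; confidence in [0, 1] is the AI's share of control."""
    alpha = min(max(confidence, 0.0), 1.0)  # clamp the arbitration weight
    return tuple((1 - alpha) * h + alpha * a for h, a in zip(human_cmd, ai_cmd))


def haptic_feedback(measured_force, scale=0.5, max_force=3.0):
    """Scale forces sensed at the remote robot to what the operator's hand controller
    can render, saturating at max_force so feedback never exceeds the device's limit."""
    return tuple(max(-max_force, min(max_force, scale * f)) for f in measured_force)
```

For example, with confidence 0.25 the robot follows the operator's command three-quarters of the way, and a 10 N contact force at the remote end is rendered as the device's 3 N ceiling. Real systems usually adapt the blending weight online, for instance based on how confidently the AI infers the operator's goal.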

Disaster response quadrupeds:

In construction, a simple misalignment can cause a structural collapse, trapping workers under debris for hours. In nuclear plants, a critical incident can release fallout that exposes an area miles in radius to heavy radiation. In such situations, even rescuers face uncertainty about their own safety.

- Navigation in rough terrain: Quadrupeds, drones, and other agile robots can navigate difficult terrain that would be hazardous for humans and perform rescue actions.

- Environmental monitoring: Mobile robots can swiftly patrol and identify active or potential hazards with early warning and mitigation systems.
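An early warning system on a patrol robot can be as simple as smoothing sensor readings and comparing them against tiered thresholds. The sketch below is a hypothetical illustration: `monitor`, the window size, and the warning and alarm levels are invented values (in practice they would depend on the sensor, such as a gas or dose-rate meter, and on site safety rules). The moving average keeps a single noisy spike from raising a full alarm while still flagging a sustained rise.

```python
from collections import deque


def monitor(readings, window=3, warn_level=50.0, alarm_level=100.0):
    """Classify each reading as OK, WARNING, or ALARM using a moving average."""
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    states = []
    for reading in readings:
        recent.append(reading)
        avg = sum(recent) / len(recent)
        if avg >= alarm_level:
            states.append("ALARM")    # trigger mitigation, alert responders
        elif avg >= warn_level:
            states.append("WARNING")  # early warning: investigate, reroute patrol
        else:
            states.append("OK")
    return states
```

For the sequence `[10, 20, 30, 90, 150, 200]`, the smoothed level first crosses the warning threshold at the fifth reading and the alarm threshold at the sixth.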

The future with physical AI is nothing short of revolutionary, where intelligent machines seamlessly integrate into our daily lives, industries, and critical operations. As AI-powered robots continue to evolve, they will unlock unprecedented levels of autonomy, efficiency, and collaboration across sectors. The dawn of physical AI is here, and it promises a world where imagination and reality converge to shape a smarter, safer, and more dynamic future.